The Next Frame: Predicting the Future of Act Motion AI Beyond Runway and Digen.ai

This article looks beyond what today's tools can do to explore the near future of generative video, forecasting upcoming features, market trends, and the ethical frontiers we are rapidly approaching.

1. Introduction: The Exponential Curve

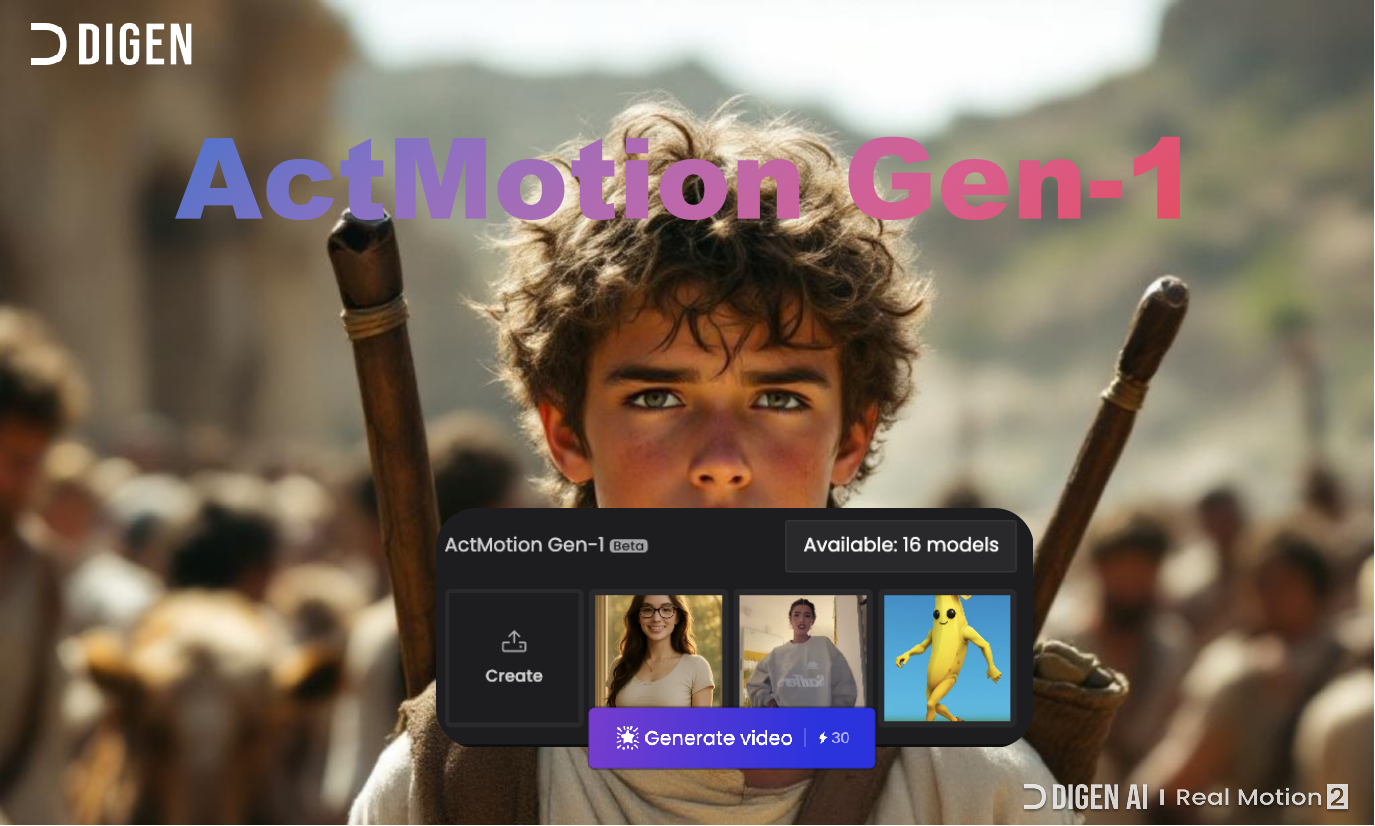

The current state of Act Motion AI, as demonstrated by Runway and Digen.ai, is not the endpoint but a fascinating waypoint on an exponential curve of development. Where do we go from here?

2. Technical Frontiers on the Horizon

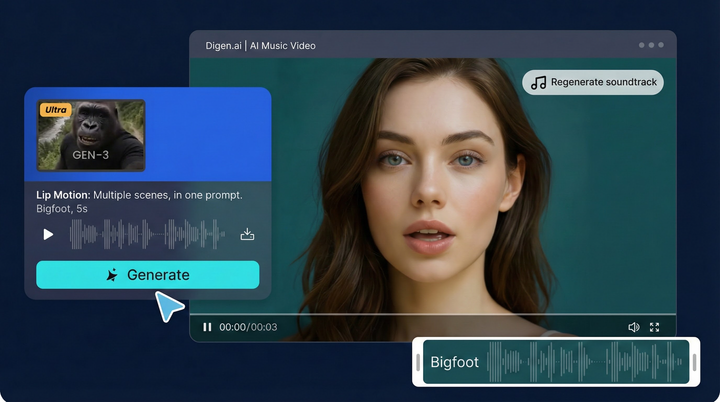

- Multi-Modal Fusion: The next generation of tools will combine the strengths of Runway and Digen.ai. Imagine recording a single performance that drives both a character's facial expressions and its full-body motion with equal fidelity.

- Real-Time Generation: Tools will move from asynchronous generation (waiting minutes for a result) to real-time animation. This will enable live streams with AI avatars that react in real time to chat and game events.

- Emotional Control Layers: Future interfaces will include direct "emotion sliders" (e.g., increase sadness to 70%, reduce anger to 20%) to fine-tune a performance beyond what was in the driver video.

- 3D Asset Output: Instead of producing only 2D video, tools will generate fully rigged 3D character models and motion files (e.g., FBX or glTF) usable in game engines like Unity and Unreal, bridging the gap between content creation and interactive media.

3. The Evolving Creator Landscape

- The "Director" Subscription: Platforms may offer tiers where creators subscribe not to a tool but to a library of AI "actors" with specific skills and looks, licensing their performances for videos.

- AI-Powered Content Agencies: The rise of agencies that specialize in producing AI-generated content at scale for brands, leveraging these tools to run entire animated marketing campaigns with tiny teams and budgets.

- The Copyright Reckoning: The industry will face complex legal battles over the copyright status of AI-generated performances, the rights of individuals whose likenesses (even stylized ones) are used, and whether using copyrighted characters as input counts as fair use.

4. Ethical and Societal Implications

- Hyper-Realistic Deepfakes: The line between animation and reality will blur to invisibility. This necessitates the development of even more robust and mandatory content provenance standards (e.g., C2PA) to maintain trust in digital media.

- Identity and Authenticity: As creators increasingly operate through AI avatars, questions about authenticity, mental health, and the disconnect between creator and persona will become central topics of discussion.

- Accessibility Revolution: These technologies will become powerful tools for accessibility, allowing individuals to create expressive content regardless of physical limitations, and giving everyone a voice in the digital landscape.

5. Conclusion: The Human Element Endures

The tools will become faster, cheaper, and more powerful. They will handle increasingly complex tasks. But the core of creation will remain human: the spark of an idea, the taste to guide the AI, the judgment to edit its output, and the courage to share a unique perspective with the world. The future of Act Motion AI is not about replacing creators; it's about empowering them to tell stories we can currently only imagine.

Maintaining perfect character consistency across frames is the holy grail of AI video. Text-based models like Runway Gen-2 can struggle with flickering features, but Digen.ai ActMotion excels here. Because it starts from a single static source image, the character's identity (face, hair, clothing, style) is locked to that image. Only the pose changes, which keeps the character consistent from the first frame to the last, whether you are animating an anime character or a photorealistic human.

Keywords: #CharacterConsistency #AIAnimation #DigitalHumans #VFX #AIVideoQuality

Achieve perfect character consistency. Test Digen.ai ActMotion on your own image.
