Digen.ai Unveils Lip Motion Gen-3: Next-Gen Audio-Driven Single-Character Video Synthesis for Hyper-Realistic Digital Humans


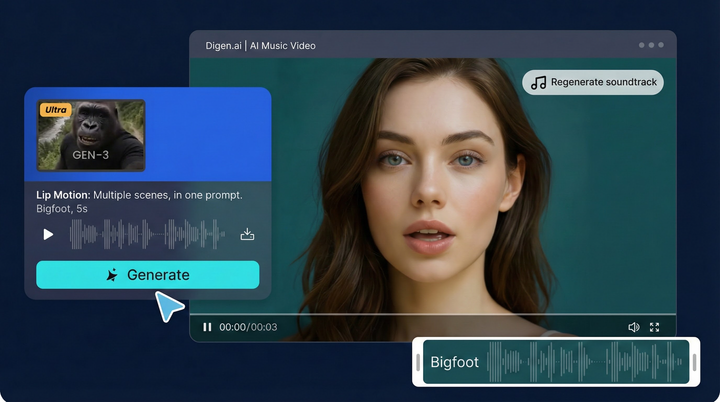

Digen.ai proudly introduces Lip Motion Gen-3, an AI model that generates high-quality single-character videos from audio input alone. Built on our proprietary L-ROPE method, which combines adaptive character positioning with semantic-aware label encoding, Gen-3 delivers marked improvements in lip-sync accuracy and facial expressiveness.

Combined with a partial-parameter fine-tuning strategy and a multi-task learning framework, Gen-3 follows text instructions closely and produces high-fidelity video, even with limited computational resources.

From virtual YouTubers and educational content to personalized messaging and game character animation, Gen-3 opens up new possibilities for immersive digital storytelling. We are committed to making professional-quality avatar video generation more accessible and efficient than ever.

Although challenges remain in bridging the gap between synthetic and real voice audio, Gen-3 represents a significant leap toward photorealistic and emotionally responsive AI-generated video.

🏆 Industry-Leading Benchmark Performance

Gen-3 was evaluated on several widely used public benchmarks:

- Talking Head Datasets: HDTF, CelebV-HQ

- Talking Body Datasets: EMTD

Evaluation used the following metrics:

- FID / FVD for frame-level and video-level visual quality

- E-FID for facial expression naturalness

- Sync-C / Sync-D (SyncNet confidence and distance) for audio-lip synchronization
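For reference, FID is the standard Fréchet distance between Gaussian fits to the feature statistics of real (r) and generated (g) frames, where the means \(\mu\) and covariances \(\Sigma\) are typically taken from Inception-v3 activations. This is the textbook definition rather than a detail of Gen-3's own evaluation pipeline. FVD and E-FID apply the same distance to features from a video network and a facial-expression extractor, respectively, and lower is better for all three.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right)
$$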

In comparative tests against leading solutions such as AniPortrait, V-Express, and EchoMimic, Gen-3 outperformed competitors on key metrics, especially lip-sync precision and video clarity.

Qualitative results further show that Gen-3 can follow complex prompts such as “a man closing his laptop” or “a woman putting on headphones and smiling,” producing smooth motion with minimal visual artifacts.

🧠 Technical Innovations

- Architecture: Built on a Diffusion Transformer (DiT) backbone with an integrated 3D VAE for efficient spatiotemporal compression.

- Multi-Modal Conditioning: Text prompts are encoded into text embeddings, while CLIP features extracted from the input character image provide visual context through decoupled cross-attention.

- Audio-Visual Fusion: Gen-3 natively conditions on audio through dedicated audio cross-attention layers. A novel audio adapter aligns Wav2Vec audio embeddings with the video latent frames, resolving the temporal mismatch between the per-frame audio sequence and the temporally compressed latents.
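The alignment problem an audio adapter of this kind solves can be illustrated with a minimal sketch. It assumes, since the post does not say, that the 3D VAE keeps the first frame on its own and merges every 4 subsequent frames into one latent frame, and that the Wav2Vec features have already been resampled to one vector per video frame. Mean pooling stands in for the learned adapter layers, and all function names are hypothetical.

```python
from typing import List, Sequence

Vector = List[float]

# Assumption (not from the post): a causal-style 3D VAE that keeps frame 0
# separate and merges every TEMPORAL_STRIDE later frames into one latent frame.
TEMPORAL_STRIDE = 4


def latent_frame_count(num_video_frames: int, stride: int = TEMPORAL_STRIDE) -> int:
    """Number of latent frames produced from 1 + k*stride video frames."""
    if num_video_frames < 1 or (num_video_frames - 1) % stride != 0:
        raise ValueError("expected 1 + k*stride video frames")
    return 1 + (num_video_frames - 1) // stride


def mean_pool(vectors: Sequence[Vector]) -> Vector:
    """Element-wise mean; a stand-in for the learned adapter layers."""
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def align_audio_to_latents(
    audio_per_frame: Sequence[Vector], stride: int = TEMPORAL_STRIDE
) -> List[Vector]:
    """Reduce per-video-frame audio embeddings to one token per latent frame.

    audio_per_frame[i] is a (hypothetical) Wav2Vec feature already resampled
    to line up with video frame i.
    """
    n_latent = latent_frame_count(len(audio_per_frame), stride)
    aligned = [list(audio_per_frame[0])]  # latent frame 0 <- video frame 0 only
    for j in range(1, n_latent):
        start = 1 + (j - 1) * stride  # first video frame covered by latent j
        aligned.append(mean_pool(audio_per_frame[start:start + stride]))
    return aligned


frames = [[float(i)] for i in range(9)]  # toy 1-D "audio features"
print(align_audio_to_latents(frames))  # [[0.0], [2.5], [6.5]]
```

Under this assumed layout, 81 video frames map to 21 latent frames, so the 81 per-frame audio vectors must be reduced to 21 audio tokens before cross-attention can pair them with the video latents.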

This end-to-end design ensures superior lip-sync quality and expressive consistency, setting a new standard in AI-driven digital human synthesis.
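Decoupled cross-attention, named in the Multi-Modal Conditioning bullet above, is commonly implemented (as in IP-Adapter) by letting the video query attend to text tokens and image tokens in two separate attention operations, each with its own key/value projections, and then summing the results. This pure-Python sketch assumes the keys and values are already projected, uses a single query vector, and introduces a hypothetical `image_scale` weight. It illustrates the general technique, not Gen-3's actual implementation.

```python
import math
from typing import List, Sequence

Vector = List[float]


def softmax(scores: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, keys: Sequence[Vector], values: Sequence[Vector]) -> Vector:
    """Scaled dot-product attention for a single query vector."""
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([sum(q * k for q, k in zip(query, key)) * scale for key in keys])
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]


def decoupled_cross_attention(
    query: Vector,
    text_keys: Sequence[Vector],
    text_values: Sequence[Vector],
    image_keys: Sequence[Vector],
    image_values: Sequence[Vector],
    image_scale: float = 1.0,  # hypothetical weight on the image branch
) -> Vector:
    """Attend to text and image tokens separately, then sum the two outputs."""
    text_out = attend(query, text_keys, text_values)
    image_out = attend(query, image_keys, image_values)
    return [t + image_scale * i for t, i in zip(text_out, image_out)]


out = decoupled_cross_attention(
    [1.0, 0.0], [[0.5, 0.5]], [[1.0, 2.0]], [[0.2, 0.1]], [[10.0, 20.0]], image_scale=0.5
)
print(out)  # [6.0, 12.0]
```

Keeping the two branches separate lets image guidance be strengthened or switched off (`image_scale=0.0`) without disturbing the text pathway.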

📈 Real-World Results & Use Cases

Gen-3 leads on all three groups of evaluation metrics:

- ✅ FID/FVD (Visual Quality)

- ✅ E-FID (Facial Expression)

- ✅ Sync-C/Sync-D (Lip-Sync Accuracy)
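Sync-C and Sync-D are conventionally computed from SyncNet audio and visual embeddings: for each candidate audio offset, take the mean distance between paired frame embeddings; Sync-D is the smallest of these mean distances (lower is better) and Sync-C is the median distance minus that minimum (higher is better). The sketch below assumes the embeddings arrive as plain lists standing in for SyncNet outputs; the function names are hypothetical.

```python
import math
import statistics
from typing import List, Optional, Sequence, Tuple

Vector = List[float]


def euclidean(a: Vector, b: Vector) -> float:
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def mean_distance_at_offset(
    video: Sequence[Vector], audio: Sequence[Vector], offset: int
) -> Optional[float]:
    """Mean distance between video[i] and audio[i + offset] over overlapping frames.

    Returns None when the sequences do not overlap at this offset.
    """
    dists = [
        euclidean(video[i], audio[i + offset])
        for i in range(len(video))
        if 0 <= i + offset < len(audio)
    ]
    return sum(dists) / len(dists) if dists else None


def sync_scores(
    video: Sequence[Vector], audio: Sequence[Vector], max_offset: int = 15
) -> Tuple[float, float, int]:
    """Return (sync_c, sync_d, best_offset) in the SyncNet evaluation style."""
    scored = []  # (mean distance, offset) for every offset with overlap
    for offset in range(-max_offset, max_offset + 1):
        dist = mean_distance_at_offset(video, audio, offset)
        if dist is not None:
            scored.append((dist, offset))
    if not scored:
        raise ValueError("audio and video embeddings never overlap")
    sync_d, best_offset = min(scored)  # lowest mean distance wins
    sync_c = statistics.median([d for d, _ in scored]) - sync_d
    return sync_c, sync_d, best_offset


video = [[float(i), 0.0] for i in range(10)]
audio = [[float(i - 2), 0.0] for i in range(10)]  # audio[i + 2] matches video[i]
print(sync_scores(video, audio, max_offset=3))  # (2.0, 0.0, 2)
```

A well-synchronized clip produces one sharp distance minimum near offset 0, which drives Sync-D down and Sync-C up; a flat distance curve yields a low Sync-C even if the minimum itself is small.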

🛠️ Generate Videos in 3 Easy Steps:

- Upload a character image or choose a template. Single-angle portraits and pre-built templates are both supported.

- Input audio or text. Upload a voice recording, or enter text for automatic text-to-speech (TTS) conversion.

- Generate and export in HD. Produce studio-quality videos in multiple resolutions and formats.


🌍 Ideal Applications:

- 📱 Short-format video & animation

- 🔴 Virtual streaming & real-time avatars

- 🎓 E-learning & personalized educational content

- 🎮 Game cinematics & interactive NPC dialogues

- 💼 Corporate promotions & dynamic product presentations

🚀 What’s Next?

We continue to enhance realism and multi-modal controllability to make AI video creation even more intuitive and powerful.

👉 Try Lip Motion Gen-3 Today!

Create engaging, lip-synced digital avatar videos in minutes.

Visit now: Digen.ai Lip Motion Gen-3 Official Page

#AIVideoGenerator #DigitalHuman #LipSyncAI #AvatarAnimation #AIContentCreator
